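Installing Hadoop on CentOS

I bought the book Hadoop徹底入門, so I'm going to try installing Hadoop on CentOS right away.

The latest release is hadoop-0.21.0, but Pig apparently supports only the Hadoop 0.20 series at the moment, so I'm installing hadoop-0.20.2.

Installing the JVM

- Download the JRE package (jre-6u25-linux-i586-rpm.bin)

- Install it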

$ chmod 755 jre-6u25-linux-i586-rpm.bin
$ sudo ./jre-6u25-linux-i586-rpm.bin
Unpacking...
Checksumming...
Extracting...
UnZipSFX 5.50 of 17 February 2002, by Info-ZIP (Zip-Bugs@lists.wku.edu).
inflating: jre-6u25-linux-i586.rpm
Preparing... ########################################### [100%]
1:jre ########################################### [100%]
Unpacking JAR files...
rt.jar...
jsse.jar...
charsets.jar...
localedata.jar...
plugin.jar...
javaws.jar...
deploy.jar...
Done.

$ export JAVA_HOME=/usr/java/latest
$ sudo -e /etc/bashrc
# Append the following line:
export JAVA_HOME=/usr/java/latest
$ echo $JAVA_HOME
/usr/java/latest
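Editing /etc/bashrc by hand works, but if these steps are ever scripted and re-run, the export line gets appended again each time. Here is a minimal sketch of an idempotent append, assuming a POSIX shell with grep. The function name `append_once` is only an illustration and is not part of any tool.

```shell
# append_once FILE LINE: add LINE to FILE only if that exact line is not already there.
# (Hypothetical helper for illustration.)
append_once() {
  local file=$1 line=$2
  # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string, not a regex
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

From a root shell (for example after `sudo -s`), `append_once /etc/bashrc 'export JAVA_HOME=/usr/java/latest'` can be run any number of times and leaves a single line.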

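Creating a user and group for Hadoop

$ sudo /usr/sbin/groupadd -g 1000 hadoop
$ sudo /usr/sbin/useradd -g hadoop -u 1000 hadoop
$ id hadoop
uid=1000(hadoop) gid=1000(hadoop) groups=1000(hadoop) context=user_u:system_r:unconfined_t

groupadd needs the group name as its final argument. useradd's -p option expects an already-encrypted password, so passing a plain string such as hadoop does not give the account a usable password. If one is needed, set it with sudo passwd hadoop. In this walkthrough the account is only entered via sudo su hadoop, so no password is required.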
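If the account setup is scripted, it helps to skip commands whose result already exists, because groupadd and useradd fail when the group or user is already there. Here is a sketch, assuming it runs as root. The function name and the `RUN=echo` dry-run switch are my own illustration.

```shell
# ensure_hadoop_account [NAME] [ID]: create group and user NAME with numeric ID
# unless they already exist. Set RUN=echo to print the commands instead of running them.
ensure_hadoop_account() {
  local name=${1:-hadoop} id=${2:-1000}
  local run=${RUN:-}
  # getent exits non-zero when the group is unknown
  if ! getent group "$name" >/dev/null 2>&1; then
    $run /usr/sbin/groupadd -g "$id" "$name"
  fi
  # id -u fails when the user does not exist
  if ! id -u "$name" >/dev/null 2>&1; then
    $run /usr/sbin/useradd -g "$name" -u "$id" "$name"
  fi
}
```

`RUN=echo ensure_hadoop_account` shows what would be executed. Running plain `ensure_hadoop_account` as root does the real work.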

Extracting and placing the Hadoop package

$ tar zxvf hadoop-0.20.2.tar.gz
$ sudo mv hadoop-0.20.2 /usr/local/
$ sudo chown -R hadoop:hadoop /usr/local/hadoop-0.20.2
$ sudo ln -s /usr/local/hadoop-0.20.2 /usr/local/hadoop
$ export HADOOP_HOME=/usr/local/hadoop
$ sudo -e /etc/bashrc
...
export HADOOP_HOME=/usr/local/hadoop # appended
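Keeping a versioned directory plus a /usr/local/hadoop symlink means an upgrade only needs the link re-pointed. Here is a sketch of the same steps as a function. It assumes the tarball has a single top-level directory, as hadoop-0.20.2.tar.gz does. `install_hadoop` is a hypothetical name, and the chown step is skipped when no owner is given, so it can be tried as a normal user.

```shell
# install_hadoop TARBALL [PREFIX] [OWNER]: extract TARBALL under PREFIX and
# point PREFIX/hadoop at the extracted directory. (Hypothetical helper.)
install_hadoop() {
  local tarball=$1 prefix=${2:-/usr/local} owner=${3:-}
  local dir
  # Name of the top-level directory inside the archive, e.g. hadoop-0.20.2
  dir=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  tar -xzf "$tarball" -C "$prefix"
  if [ -n "$owner" ]; then
    chown -R "$owner" "$prefix/$dir"
  fi
  # -n: replace an existing symlink to a directory instead of creating a link inside it
  ln -sfn "$prefix/$dir" "$prefix/hadoop"
}
```

As root, `install_hadoop hadoop-0.20.2.tar.gz /usr/local hadoop:hadoop` corresponds to the commands above.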

- Editing conf/core-site.xml

$ diff -u core-site.xml.bk $HADOOP_HOME/conf/core-site.xml
--- core-site.xml.bk 2011-04-26 09:10:31.000000000 +0900
+++ /usr/local/hadoop/conf/core-site.xml 2011-04-26 09:13:30.000000000 +0900
@@ -4,5 +4,13 @@
 <!-- Put site-specific property overrides in this file. -->
 <configuration>
+  <property>
+    <name>hadoop.tmp.dir</name>
+    <value>/hadoop</value>
+  </property>
+  <property>
+    <name>fs.default.name</name>
+    <value>hdfs://localhost:54310</value>
+  </property>
 </configuration>
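Instead of editing the file and diffing it against a backup, the same core-site.xml can be written non-interactively with a here-document. Here is a sketch, assuming the conf directory is passed in (defaulting to `$HADOOP_HOME/conf`). The two header lines mirror the stock 0.20.2 file as far as I know. `write_core_site` is a hypothetical name.

```shell
# write_core_site [CONF_DIR]: overwrite CONF_DIR/core-site.xml with the settings used here.
write_core_site() {
  local conf_dir=${1:-$HADOOP_HOME/conf}
  # Quoted 'EOF' delimiter: nothing inside the here-document is expanded by the shell
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/hadoop</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
EOF
}
```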

- Editing conf/hdfs-site.xml

$ diff -u hdfs-site.xml.bk $HADOOP_HOME/conf/hdfs-site.xml
--- hdfs-site.xml.bk 2011-04-26 09:16:38.000000000 +0900
+++ /usr/local/hadoop/conf/hdfs-site.xml 2011-04-26 09:19:11.000000000 +0900
@@ -4,5 +4,12 @@
 <!-- Put site-specific property overrides in this file. -->
 <configuration>
-
+  <property>
+    <name>dfs.name.dir</name>
+    <value>${hadoop.tmp.dir}/dfs/name</value>
+  </property>
+  <property>
+    <name>dfs.data.dir</name>
+    <value>${hadoop.tmp.dir}/dfs/data</value>
+  </property>
 </configuration>
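The `${hadoop.tmp.dir}` in these values is not shell syntax. Hadoop's Configuration class substitutes the value of another property (or a Java system property) when the setting is read. With hadoop.tmp.dir=/hadoop, the NameNode metadata therefore ends up in /hadoop/dfs/name. Below is a rough shell imitation of that substitution for this one variable, purely to illustrate. `expand_tmp_dir` is hypothetical and does not handle sed special characters such as `|` or `&` in the replacement path.

```shell
# expand_tmp_dir VALUE TMP_DIR: replace every literal ${hadoop.tmp.dir} in VALUE with TMP_DIR.
expand_tmp_dir() {
  # \$ stops the shell from expanding; \. makes the dots literal in the sed pattern
  printf '%s\n' "$1" | sed "s|\${hadoop\.tmp\.dir}|$2|g"
}
```

For example, `expand_tmp_dir '${hadoop.tmp.dir}/dfs/data' /hadoop` gives /hadoop/dfs/data, the DataNode block directory.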

- Editing conf/mapred-site.xml

$ diff -u mapred-site.xml.bk $HADOOP_HOME/conf/mapred-site.xml
--- mapred-site.xml.bk 2011-04-26 09:20:41.000000000 +0900
+++ /usr/local/hadoop/conf/mapred-site.xml 2011-04-26 09:22:15.000000000 +0900
@@ -4,5 +4,12 @@
 <!-- Put site-specific property overrides in this file. -->
 <configuration>
-
+  <property>
+    <name>mapred.job.tracker</name>
+    <value>localhost:54311</value>
+  </property>
+  <property>
+    <name>mapred.local.dir</name>
+    <value>${hadoop.tmp.dir}/mapred</value>
+  </property>
 </configuration>
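To double-check what ended up in a *-site.xml without reading the XML, a quick name=value listing can help. This is a crude sed-based sketch that only works for files laid out like the ones above: one `<name>` and one `<value>` element per line, with the name before the value. It is not an XML parser, and `${...}` references are printed unexpanded. `list_properties` is a hypothetical helper.

```shell
# list_properties FILE: print "name=value" for each property in a simply formatted *-site.xml.
list_properties() {
  # Print the text inside each <name> and <value> element, one per line,
  # then join consecutive pairs of lines with "=".
  sed -n -e 's:.*<name>\(.*\)</name>.*:\1:p' \
         -e 's:.*<value>\(.*\)</value>.*:\1:p' "$1" | paste -d= - -
}
```

For example, `list_properties $HADOOP_HOME/conf/mapred-site.xml` prints the two properties added above.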

- Editing conf/hadoop-env.sh

$ diff -u hadoop-env.sh.bk $HADOOP_HOME/conf/hadoop-env.sh
--- hadoop-env.sh.bk 2011-04-26 09:23:30.000000000 +0900
+++ /usr/local/hadoop/conf/hadoop-env.sh 2011-04-26 09:26:18.000000000 +0900
@@ -32,6 +32,7 @@
 # Where log files are stored. $HADOOP_HOME/logs by default.
 # export HADOOP_LOG_DIR=${HADOOP_HOME}/logs
+export HADOOP_LOG_DIR=/var/log/hadoop
 # File naming remote slave hosts. $HADOOP_HOME/conf/slaves by default.
 # export HADOOP_SLAVES=${HADOOP_HOME}/conf/slaves
@@ -46,9 +47,11 @@
 # The directory where pid files are stored. /tmp by default.
 # export HADOOP_PID_DIR=/var/hadoop/pids
+export HADOOP_PID_DIR=/var/run/hadoop
 # A string representing this instance of hadoop. $USER by default.
 # export HADOOP_IDENT_STRING=$USER
+export HADOOP_IDENT_STRING=sample
 # The scheduling priority for daemon processes. See 'man nice'.
 # export HADOOP_NICENESS=10
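The same three hadoop-env.sh changes can be applied with a small helper. It removes any earlier active `export NAME=` line and appends the new one, leaving the commented defaults alone. This sketch assumes GNU sed (`-i`) and variable names without regex metacharacters. `set_env_var` is a made-up name. Unlike the diff above, it appends at the end of the file rather than next to the matching comment, which makes no difference when the file is sourced.

```shell
# set_env_var FILE NAME VALUE: make FILE contain exactly one active "export NAME=VALUE" line.
set_env_var() {
  local file=$1 name=$2 value=$3
  # Delete active exports of NAME; commented lines ("# export ...") do not match ^export
  sed -i "/^export ${name}=/d" "$file"
  printf 'export %s=%s\n' "$name" "$value" >> "$file"
}
```

For this setup that means `set_env_var "$HADOOP_HOME/conf/hadoop-env.sh" HADOOP_PID_DIR /var/run/hadoop`, and likewise for HADOOP_LOG_DIR and HADOOP_IDENT_STRING.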

Setting up directories for Hadoop

Create the Hadoop directories that the settings above refer to.

$ sudo mkdir /hadoop
$ sudo mkdir /var/log/hadoop
$ sudo mkdir /var/run/hadoop
$ sudo chmod 777 /hadoop
$ sudo chown -R hadoop:hadoop /hadoop
$ sudo chown -R hadoop:hadoop /var/log/hadoop
$ sudo chown -R hadoop:hadoop /var/run/hadoop
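As a script, the directory setup can use `mkdir -p`, which does not fail if a directory already exists. Here is a sketch with an optional prefix (handy for a trial run in a scratch directory) and an optional owner. `make_hadoop_dirs` is a hypothetical helper, and the chown step requires root.

```shell
# make_hadoop_dirs [PREFIX] [OWNER]: create the directories named in
# core-site.xml and hadoop-env.sh, optionally under PREFIX and owned by OWNER.
make_hadoop_dirs() {
  local prefix=${1:-} owner=${2:-} d
  for d in /hadoop /var/log/hadoop /var/run/hadoop; do
    mkdir -p "${prefix}${d}"
    if [ -n "$owner" ]; then
      chown -R "$owner" "${prefix}${d}"
    fi
  done
}
```

As root, `make_hadoop_dirs "" hadoop:hadoop` creates the same three directories as the commands above.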

Distributing the SSH public key

$ sudo su hadoop
$ ssh-keygen -t rsa -P ""
Generating public/private rsa key pair.
Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
Created directory '/home/hadoop/.ssh'.
Your identification has been saved in /home/hadoop/.ssh/id_rsa.
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
The key fingerprint is:
4b:dd:b8:74:66:8a:41:f6:76:17:6e:ae:61:de:cf:71 hadoop@localhost.localdomain
$ ls ~/.ssh/
id_rsa id_rsa.pub
$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
$ chmod 600 ~/.ssh/authorized_keys
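sshd ignores authorized_keys if that file or the .ssh directory is writable by group or others (its StrictModes check, on by default). The symptom is an unexpected password prompt. Here is a small check, assuming GNU coreutils `stat`. The modes 700 and 600 are the usual conservative choice and are stricter than sshd actually requires. `check_ssh_perms` is a hypothetical name.

```shell
# check_ssh_perms [DIR]: succeed if DIR is mode 700 and DIR/authorized_keys is mode 600.
check_ssh_perms() {
  local dir=${1:-$HOME/.ssh}
  # stat -c %a prints the octal permission bits (GNU coreutils)
  if [ "$(stat -c %a "$dir" 2>/dev/null)" != 700 ]; then
    echo "fix with: chmod 700 $dir" >&2
    return 1
  fi
  if [ "$(stat -c %a "$dir/authorized_keys" 2>/dev/null)" != 600 ]; then
    echo "fix with: chmod 600 $dir/authorized_keys" >&2
    return 1
  fi
}
```

Once it passes, log in once with `ssh localhost` to accept the host key (otherwise start-dfs.sh asks about it). After that, `ssh -o BatchMode=yes localhost true` should succeed without any prompt, because BatchMode makes ssh fail rather than ask for input.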

Formatting the NameNode

$ $HADOOP_HOME/bin/hadoop namenode -format
11/04/26 09:42:05 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = localhost.localdomain/127.0.0.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 0.20.2
STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010
************************************************************/
11/04/26 09:42:05 INFO namenode.FSNamesystem: fsOwner=hadoop,hadoop
11/04/26 09:42:05 INFO namenode.FSNamesystem: supergroup=supergroup
11/04/26 09:42:05 INFO namenode.FSNamesystem: isPermissionEnabled=true
11/04/26 09:42:05 INFO common.Storage: Image file of size 96 saved in 0 seconds.
11/04/26 09:42:05 INFO common.Storage: Storage directory /hadoop/dfs/name has been successfully formatted.
11/04/26 09:42:05 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1
************************************************************/
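Formatting again later throws away the existing HDFS metadata, so a setup script should format only once. One way to guard that is to check for the storage directory's VERSION file. The `current/VERSION` path is my assumption about the on-disk layout of this Hadoop generation, and `namenode_formatted` is a hypothetical helper.

```shell
# namenode_formatted [NAME_DIR]: succeed if NAME_DIR (dfs.name.dir) already looks formatted.
# Assumes a formatted directory contains current/VERSION.
namenode_formatted() {
  [ -f "${1:-/hadoop/dfs/name}/current/VERSION" ]
}
```

Run as the hadoop user: `namenode_formatted || $HADOOP_HOME/bin/hadoop namenode -format`.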

Starting the HDFS daemons

$ $HADOOP_HOME/bin/start-dfs.sh
starting namenode, logging to /var/log/hadoop/hadoop-sample-namenode-localhost.localdomain.out
The authenticity of host 'localhost (127.0.0.1)' can't be established.
RSA key fingerprint is 34:37:28:4c:1a:23:ce:e6:fc:b2:a1:ef:a1:35:b7:0f.
Are you sure you want to continue connecting (yes/no)? yes
localhost: Warning: Permanently added 'localhost' (RSA) to the list of known hosts.
localhost: starting datanode, logging to /var/log/hadoop/hadoop-sample-datanode-localhost.localdomain.out
localhost: starting secondarynamenode, logging to /var/log/hadoop/hadoop-sample-secondarynamenode-localhost.localdomain.out

Starting the MapReduce daemons

$ $HADOOP_HOME/bin/start-mapred.sh
starting jobtracker, logging to /var/log/hadoop/hadoop-sample-jobtracker-localhost.localdomain.out
localhost: starting tasktracker, logging to /var/log/hadoop/hadoop-sample-tasktracker-localhost.localdomain.out
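`jps` is a common way to list the running daemons, but it ships with the JDK rather than the JRE installed above. Another option is the pid files. As far as I can tell from the start scripts, they are written to HADOOP_PID_DIR as `hadoop-<ident>-<daemon>.pid`, matching the `hadoop-sample-...` prefix of the log files above. With the settings here that would be /var/run/hadoop/hadoop-sample-namenode.pid and so on. Here is a sketch under that assumption. `daemon_status` is hypothetical. Run it as the hadoop user, since `kill -0` on another user's process fails for non-root users.

```shell
# daemon_status [PID_DIR] [IDENT]: report whether each Hadoop daemon's pid is alive.
# Assumes pid files named hadoop-IDENT-DAEMON.pid in PID_DIR.
daemon_status() {
  local pid_dir=${1:-/var/run/hadoop} ident=${2:-sample} d pid_file
  for d in namenode datanode secondarynamenode jobtracker tasktracker; do
    pid_file=$pid_dir/hadoop-$ident-$d.pid
    # kill -0 sends no signal; it only checks that the process exists and can be signalled
    if [ -f "$pid_file" ] && kill -0 "$(cat "$pid_file")" 2>/dev/null; then
      echo "$d running"
    else
      echo "$d stopped"
    fi
  done
}
```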

Checking that the Hadoop cluster is up

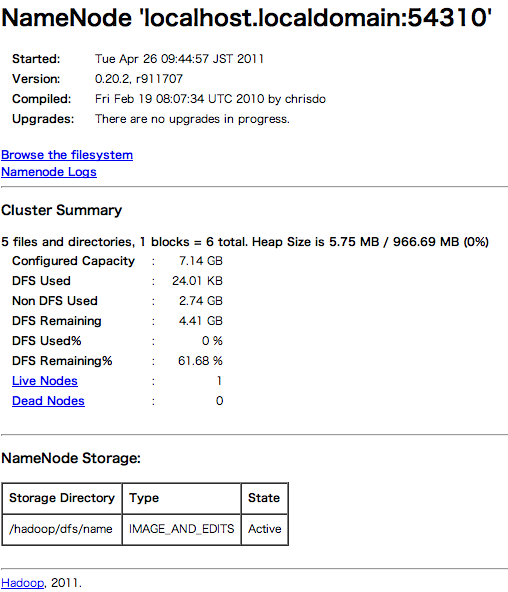

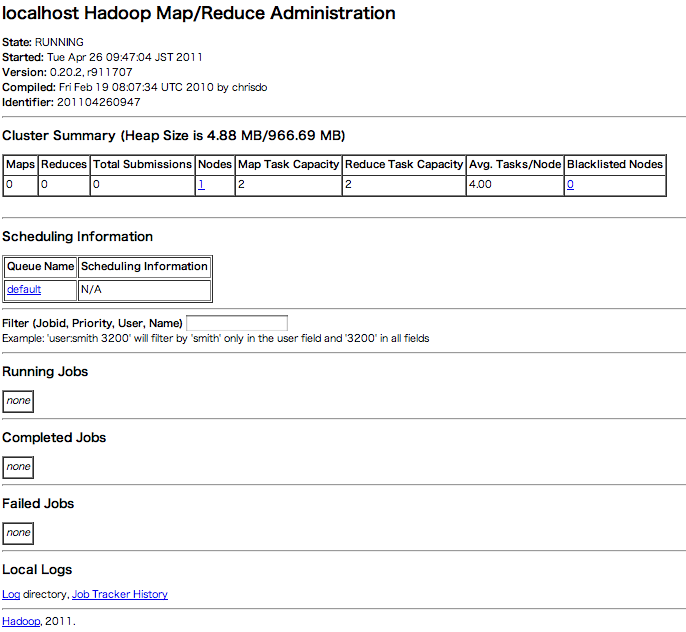

Check the cluster through the web interfaces.

- HDFS status

http://localhost:50070

- MapReduce status

http://localhost:50030
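From a shell on the same machine, bash's /dev/tcp pseudo-device gives a quick check that something is listening on those two ports. This sketch assumes bash and coreutils `timeout` are available. `port_open` is a hypothetical helper. An open port only shows that a daemon accepted the connection, not that the web page is healthy.

```shell
# port_open HOST PORT: succeed if a TCP connection to HOST:PORT opens within 2 seconds.
port_open() {
  # /dev/tcp/HOST/PORT is a bash feature, so run the probe in bash explicitly;
  # HOST and PORT are passed as positional arguments to avoid quoting problems
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Example: check the NameNode and JobTracker web UIs
# for p in 50070 50030; do port_open localhost "$p" && echo "$p open" || echo "$p closed"; done
```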

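Running MapReduce

The Hadoop package ships with several sample programs for checking that MapReduce works.

This time I'll run pi, a sample application that computes the value of pi using the Monte Carlo method.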
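The idea behind the example is to scatter points over the unit square and count how many fall inside the inscribed circle of radius 1/2. That fraction approaches π/4, so π ≈ 4 × inside / total. As far as I know, Hadoop's example generates its points with a Halton sequence (a quasi-Monte Carlo variant) rather than random numbers, with each map task handling its own share of the samples. Below is a single-process sketch of that calculation in awk. It is not Hadoop's actual code, and `estimate_pi` is a hypothetical name.

```shell
# estimate_pi [N]: estimate pi from the first N points of the 2-D Halton sequence (bases 2 and 3).
estimate_pi() {
  awk -v n="${1:-40000}" '
    # halton(i, b): radical inverse of index i in base b, a value in [0, 1)
    function halton(i, b,    f, r) {
      f = 1; r = 0
      while (i > 0) { f /= b; r += f * (i % b); i = int(i / b) }
      return r
    }
    BEGIN {
      inside = 0
      for (k = 1; k <= n; k++) {
        # shift the point so the circle is centred at the origin
        x = halton(k, 2) - 0.5; y = halton(k, 3) - 0.5
        if (x * x + y * y <= 0.25) inside++
      }
      printf "%.6f\n", 4 * inside / n
    }'
}
```

`estimate_pi 40000` uses the same total sample count as the `pi 4 10000` run below. The digits will not match Hadoop's exactly, because the points are not the same.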

$ cd $HADOOP_HOME
$ ./bin/hadoop jar hadoop-0.20.2-examples.jar pi 4 10000
Number of Maps = 4
Samples per Map = 10000
Wrote input for Map #0
Wrote input for Map #1
Wrote input for Map #2
Wrote input for Map #3
Starting Job
11/04/26 10:04:34 INFO mapred.FileInputFormat: Total input paths to process : 4
11/04/26 10:04:35 INFO mapred.JobClient: Running job: job_201104260947_0001
11/04/26 10:04:36 INFO mapred.JobClient: map 0% reduce 0%
11/04/26 10:04:51 INFO mapred.JobClient: map 50% reduce 0%
11/04/26 10:05:01 INFO mapred.JobClient: map 100% reduce 0%
11/04/26 10:05:17 INFO mapred.JobClient: map 100% reduce 100%
11/04/26 10:05:19 INFO mapred.JobClient: Job complete: job_201104260947_0001
11/04/26 10:05:20 INFO mapred.JobClient: Counters: 18
11/04/26 10:05:20 INFO mapred.JobClient: Job Counters
11/04/26 10:05:20 INFO mapred.JobClient: Launched reduce tasks=1
11/04/26 10:05:20 INFO mapred.JobClient: Launched map tasks=4
11/04/26 10:05:20 INFO mapred.JobClient: Data-local map tasks=4
11/04/26 10:05:20 INFO mapred.JobClient: FileSystemCounters
11/04/26 10:05:20 INFO mapred.JobClient: FILE_BYTES_READ=94
11/04/26 10:05:20 INFO mapred.JobClient: HDFS_BYTES_READ=472
11/04/26 10:05:20 INFO mapred.JobClient: FILE_BYTES_WRITTEN=334
11/04/26 10:05:20 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=215
11/04/26 10:05:20 INFO mapred.JobClient: Map-Reduce Framework
11/04/26 10:05:20 INFO mapred.JobClient: Reduce input groups=8
11/04/26 10:05:20 INFO mapred.JobClient: Combine output records=0
11/04/26 10:05:20 INFO mapred.JobClient: Map input records=4
11/04/26 10:05:20 INFO mapred.JobClient: Reduce shuffle bytes=112
11/04/26 10:05:20 INFO mapred.JobClient: Reduce output records=0
11/04/26 10:05:20 INFO mapred.JobClient: Spilled Records=16
11/04/26 10:05:20 INFO mapred.JobClient: Map output bytes=72
11/04/26 10:05:20 INFO mapred.JobClient: Map input bytes=96
11/04/26 10:05:20 INFO mapred.JobClient: Combine input records=0
11/04/26 10:05:20 INFO mapred.JobClient: Map output records=8
11/04/26 10:05:20 INFO mapred.JobClient: Reduce input records=8
Job Finished in 45.508 seconds
Estimated value of Pi is 3.14140000000000000000
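The last line can be read as follows. The estimate is 4 times the fraction of sample points that landed inside the circle. Assuming that is how this example computes it, the 4 × 10000 = 40000 samples of this run imply that 31414 points fell inside:

```latex
\hat{\pi} = 4 \cdot \frac{N_{\text{inside}}}{N_{\text{maps}} \times N_{\text{samples}}}
          = 4 \cdot \frac{31414}{4 \times 10000}
          = 3.1414
```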